Introduction

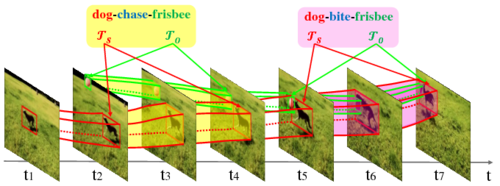

As a bridge to connect vision and language, visual relations between objects such as “person-touch-dog” and “cat-above-sofa” provide a more comprehensive visual content understanding beyond objects. Video Visual Relation Detection (VidVRD) aims to detect instances of visual relations of interest in a video, where a visual relation instance is represented by a relation triplet <subject, predicate, object> with the trajectories of the subject and object (as shown in Figure 1). As compared to still images, videos provide a more natural set of features for detecting visual relations, such as the dynamic relations like “A-follow-B” and “A-towards-B”, and temporally changing relations like “A-chase-B” followed by “A-hold-B”. Yet, VidVRD is technically more challenging than ImgVRD due to the difficulties in accurate object tracking and diverse relation appearances in the video domain.

We release the first dataset, namely ImageNet-VidVRD, in order to facilitate innovative researches on the problem. The dataset contains 1,000 videos selected from ILVSRC2016-VID dataset based on whether the video contains clear visual relations. It is split into 800 training set and 200 test set, and covers common subject/objects of 35 categories and predicates of 132 categories. Ten people contributed to labeling the dataset, which includes object trajectory labeling and relation labeling. Since the ILVSRC2016-VID dataset has the object trajectory annotation for 30 categories already, we supplemented the annotations by labeling the remaining 5 categories. In order to save the labor of relation labeling, we labeled typical segments of the videos in the training set and the whole of the videos in the test set. Several statistics of the dataset are shown in below.